What you can’t do is use individual data taken from the population to make the sort of comparisons you’d make in controlled experiments, because too many of those variables are uncontrolled.

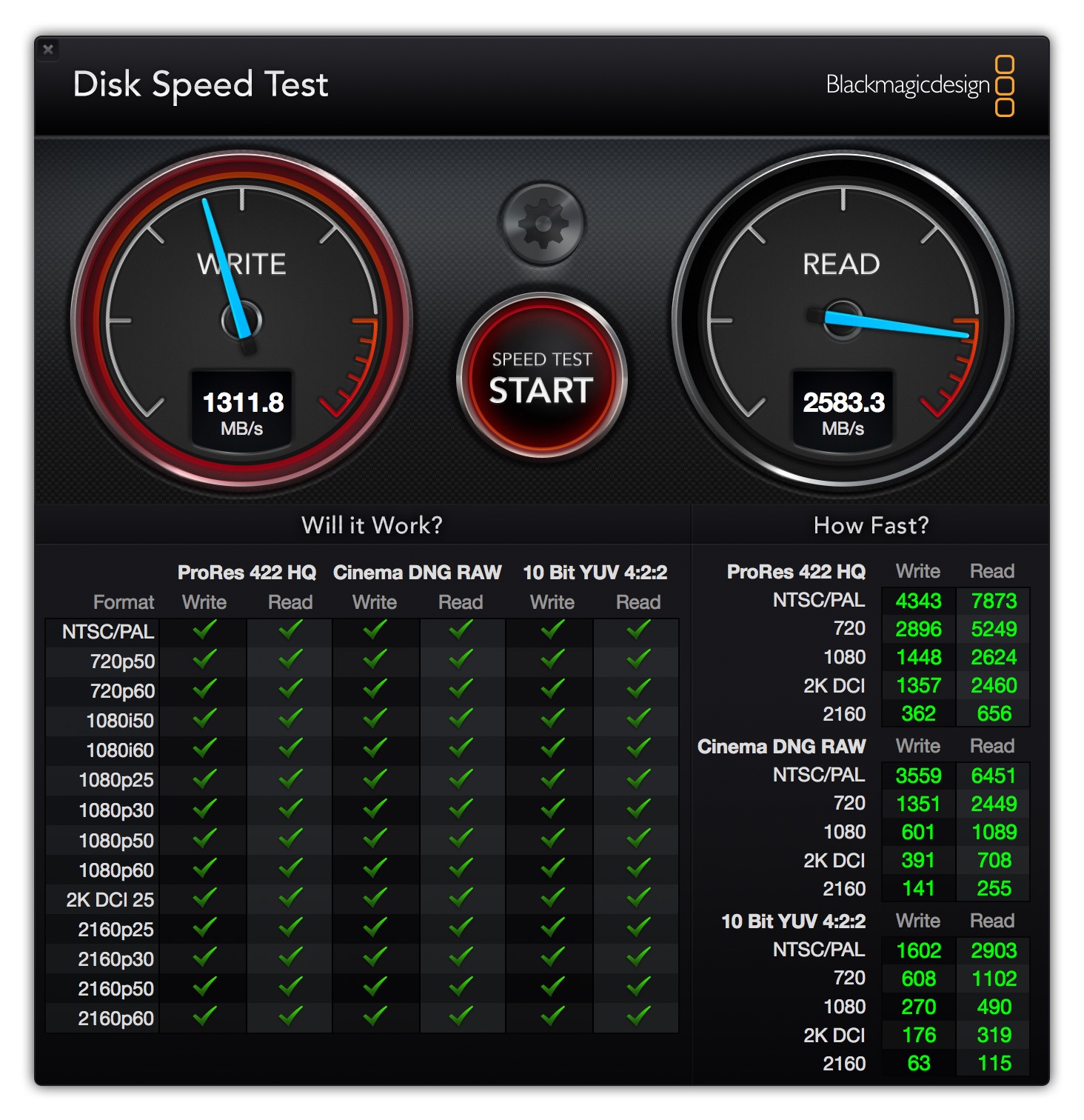

BLACKMAGIC HARD DRIVE TEST SOFTWARE

- other software running which may access the disk during testing
- different versions of macOS, kexts, firmware, etc.

Having decided what to benchmark, we then need to get its best estimate, either with small dispersion or known variance. And if the test doesn’t explain exactly what it does, we simply can’t trust what it’s doing.
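One way to put "best estimate with known variance" into practice is to repeat the same test several times and report the mean together with its spread. The following is a minimal sketch in Swift; the names `BenchmarkSummary` and `summarise` are hypothetical, and it assumes the repeated runs are independent measurements of the same quantity.

```swift
import Foundation

/// Summary of repeated measurements of one benchmark, e.g. write speed in MB/s.
/// (Hypothetical type, for illustration only.)
struct BenchmarkSummary {
    let mean: Double
    let standardDeviation: Double       // sample standard deviation (divides by n - 1)
    let coefficientOfVariation: Double  // standard deviation relative to the mean
}

/// Returns nil when there are fewer than two samples, because dispersion
/// cannot be estimated from a single run.
func summarise(_ samples: [Double]) -> BenchmarkSummary? {
    guard samples.count >= 2 else { return nil }
    let n = Double(samples.count)
    let mean = samples.reduce(0, +) / n
    let sumOfSquares = samples.reduce(0) { $0 + ($1 - mean) * ($1 - mean) }
    let sd = (sumOfSquares / (n - 1)).squareRoot()
    let cv = mean != 0 ? sd / mean : Double.nan
    return BenchmarkSummary(mean: mean, standardDeviation: sd, coefficientOfVariation: cv)
}
```

A single run passed to `summarise` returns `nil`, which is the point: one number on its own says nothing about dispersion. A small coefficient of variation across runs suggests the estimate is reasonably stable, while a large one suggests that something uncontrolled is interfering with the test.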

BLACKMAGIC HARD DRIVE TEST CODE

Over the last couple of weeks, I’ve read more benchmarks and other performance measurements on SSDs than I’ve ever seen before, thanks to so many of you who have contributed results from your own tests. This article explains some of the difficulties in interpreting this avalanche of data, and how we can move forward.

We run benchmark tests for different reasons. For some, it’s to prove that their purchase choice performs better than those of others. For those in the trade, it’s to show how fast their product is compared with their competitors. What I want to know, though, is how fast storage will be when in use, typically doing mundane tasks like reading and saving files, and when copying in the Finder. That’s important, because some benchmarks use quite different code from that normally used by apps, and features in storage can also be tuned to deliver better benchmark results even though in real use they’re slower. So any benchmark which runs crafted code calling low-level functions in C doesn’t tell me as much as one using standard FileHandle calls from Swift or Objective-C.
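To illustrate what a test built on standard FileHandle calls from Swift might look like, here is a minimal sketch that times one write and one read of a file. The function name `timeFileHandle` and the choice of fill data are assumptions for illustration, not the code used by any particular benchmark app. Note that a read straight after a write is likely to be served from cache rather than from storage, so the read figure here should not be taken as a disk speed.

```swift
import Foundation

/// Times writing and then reading `byteCount` bytes at `url` using FileHandle.
/// Returns throughput in MB/s, taking 1 MB as 1,000,000 bytes.
/// (Hypothetical helper, for illustration only.)
func timeFileHandle(at url: URL, byteCount: Int) throws -> (writeMBps: Double, readMBps: Double) {
    // Constant fill data: simple, but some storage may handle it unusually well.
    let data = Data(repeating: 0xA5, count: byteCount)
    guard FileManager.default.createFile(atPath: url.path, contents: nil) else {
        throw CocoaError(.fileWriteUnknown)
    }
    // Remove the test file whether or not the test succeeds.
    defer { try? FileManager.default.removeItem(at: url) }

    let writer = try FileHandle(forWritingTo: url)
    let writeStart = ProcessInfo.processInfo.systemUptime
    try writer.write(contentsOf: data)
    try writer.synchronize()  // ask for a flush to storage before stopping the clock
    let writeSeconds = ProcessInfo.processInfo.systemUptime - writeStart
    try writer.close()

    let reader = try FileHandle(forReadingFrom: url)
    let readStart = ProcessInfo.processInfo.systemUptime
    let readBack = try reader.readToEnd() ?? Data()
    let readSeconds = ProcessInfo.processInfo.systemUptime - readStart
    try reader.close()

    // Fail loudly if the file did not round-trip at the expected size.
    guard readBack.count == byteCount else {
        throw CocoaError(.fileReadCorruptFile)
    }

    let megabytes = Double(byteCount) / 1_000_000
    return (megabytes / writeSeconds, megabytes / readSeconds)
}
```

Calling this repeatedly with a range of file sizes, on an otherwise idle system, gives a set of figures that can then be summarised rather than quoted individually.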
